The Excel Effect

In the 1980s, when they were first introduced, spreadsheets were a novelty. VisiCalc pioneered the flexible row-and-column software category, but it was quickly eclipsed by competitors and, ultimately, by the still-dominant Microsoft Excel. Spreadsheets could solve practically any problem faced by financial analysts in a fraction of the time required with paper, pencil, and an HP 12C calculator.

Imagine an alternative history where, instead of finance departments using Excel all day to solve all kinds of problems, they instead worked with partners who were Excel experts. It’s not as crazy as it sounds; I remember my father—an executive raised on pen and paper—working with a consultant whose superpower was building Excel models. It seems likely that in this world, financial analysis would be slow and frustrating, and that analysts wouldn’t understand their work as well.

Today, practically everyone in the enterprise uses Excel. Some use it better than others, but everyone can open a spreadsheet, write a formula, and do basic analysis. This has been true for decades.

Craft versus Skill

However, the data that employees have to deal with today has gotten much bigger and more complex in the roughly 30 years since the widespread adoption of Excel. Software has automated practically all of an enterprise’s functions, which has in turn generated orders of magnitude more data. Perhaps the biggest source of new data has been the vast digital marketing ecosystem, which generates billions of rows of interactions per day at large companies. As a result, more and more information is now stored in countless complex databases, warehouses, and lakes, which has made spreadsheets an increasingly unsuitable tool for analysis.

Fortunately, the data analysis toolkit has gotten far more robust to meet the data exhaust challenge. Open-source data science libraries provide elegant solutions to seemingly any statistical or data visualization problem, while cloud-based compute and storage have reached the scale and speed required to deal cost-effectively with billion-row data frames. It is now possible for a “citizen data scientist” (a pejorative term I’ve never liked, for reasons I will explain in the guild section below) to do practically anything they want.

However, the Excel effect—where employees adopt new tools themselves to superpower their work—has not really happened with these new tools. Instead, “analytics” is mostly outsourced by craft experts to skill experts, whether inside or outside of a team. A craft expert is someone who fully understands how their part of the business works, while a skill expert is someone who can operate a particular piece of technology. This siloing has had the unfortunate effect of creating a lot of lousy output—slow, inaccurate, and in some cases not even answering the right questions.

However, there is evidence that we are approaching another “Excel revolution” tipping point. Integrated cloud platforms like Databricks bring storage, compute, development environments, and source control together in one place, while AI copilots and massive open online courses (MOOCs) have made learning Python, SQL, and R straightforward.

Down with Guilds

So what is stopping teams from superpowering themselves and owning and analyzing their own data? Certainly, there is a lagging skill issue. Excel’s successors are more complicated and require more expertise to run, and many employees are simply unwilling or unable to be reskilled. However, I think that the main reason business teams remain reluctant to become analytical is internal political pressure from entrenched centers of excellence exhibiting guild behavior.

Guild behavior is understandable; people who have spent years training to acquire an arcane skill do not want to see their market flooded with competition, and so they tend to jealously guard their profession, through certification or simple bullying. Guilds exist all over today’s economy, including in medicine and law. However, they shouldn’t exist inside enterprises. Leaders should be very wary of centers of excellence that claim that what they do can “only be done by us.”

Specialized departments that are not core to the business should exist as enablers—not as blockers. Today, many functional departments are being held hostage by experts who have forgotten their mandate to serve and instead seem to focus endlessly on all the wrong things. I’m not saying that these departments shouldn’t exist—but they need to remember their mandate.

Marketer, Heal Thyself

Marketing might be the most egregious example of craft versus skill siloing in the entire enterprise. Marketing has become technical over the past two decades. Digital data, martech software, attribution modeling, customer and audience segmentation and insights, and propensity-to-buy modeling are not nice-to-haves; these are marketing’s primary use cases.

And yet, marketing departments remain siloed, in some cases with marketing analytics separate from “execution” groups, and in an even more extreme model, outsourcing anything technical to general “analytics centers of excellence.” It is time to start transitioning away from these models, and to make marketing itself analytical. Concretely, marketing teams should own their data, tools, and analysis, in the same way that accounting teams own the general ledger and finance teams own forecasts and cap tables. Most specifically, if marketing is truly the customer-facing aspect of the enterprise, then it should own customer data.

Of course, there will always be newer technologies that require specialized skills. Econometric modeling, for example, the method behind media mix modeling (MMM), demands statistical expertise and experience. However, writing SQL queries to derive dataframes that get to the bottom of why a set of customers is defecting at higher rates is not, as of 2025, something that requires a special department.

The same goes for ad hoc analysis using Python and R. AI copilots have made code writing pretty straightforward. Marketers who understand the basics of objects, functions, and loops should be able to quickly piece together analysis notebooks themselves—and doing so will make them much more intimate with their data and their customers.
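To make this concrete, here is a minimal sketch of the kind of defection query a marketer might write in a notebook. Everything in it is an assumption for illustration: the `customers` table, its columns, and the sample rows are invented, and an in-memory SQLite database stands in for whatever warehouse a team actually uses.

```python
import sqlite3

# Hypothetical customer records: (customer_id, segment, churned).
# The table, columns, and values are invented for illustration only.
ROWS = [
    (1, "enterprise", 0),
    (2, "enterprise", 0),
    (3, "smb", 1),
    (4, "smb", 1),
    (5, "smb", 0),
    (6, "smb", 1),
    (7, "enterprise", 1),
    (8, "smb", 0),
]

# Churn rate per segment, highest first. AVG over a 0/1 flag is the rate.
CHURN_BY_SEGMENT = """
    SELECT segment,
           COUNT(*)               AS customers,
           SUM(churned)           AS churned,
           ROUND(AVG(churned), 2) AS churn_rate
    FROM customers
    GROUP BY segment
    ORDER BY churn_rate DESC
"""


def churn_by_segment(rows):
    """Load rows into an in-memory database and return churn by segment."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE customers "
            "(customer_id INTEGER, segment TEXT, churned INTEGER)"
        )
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
        return conn.execute(CHURN_BY_SEGMENT).fetchall()
    finally:
        conn.close()


result = churn_by_segment(ROWS)
for segment, customers, churned, rate in result:
    print(f"{segment:<12}{customers:>4}{churned:>4}{rate:>7.2f}")
```

In this toy data the smb segment churns at 3 of 5 customers and enterprise at 1 of 3, so smb sorts first. The point is not the query itself but that the person who knows which segments matter can ask the question directly.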

Federated Data

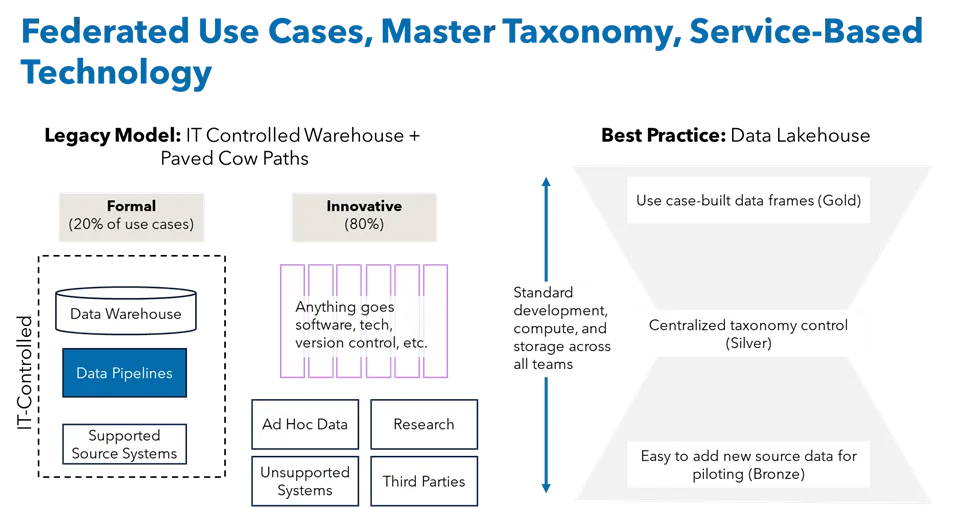

Marketing data remains a more complex issue than analytical tooling; we will never get to a place where data are fully democratized, nor do we want to. Without steady ground, no one will ever agree on the truth. However, too much focus on control leads to sclerosis and “paved cow paths” around formal data flows.

Figure 1: In the old model, IT controlled data, and while it was accurate, it failed for many use cases, leading to paved cow paths. A more modern medallion approach brings raw data together in one place, standardizes “truth” in the middle, and lets business experts create their own “gold” dataframes (or views).

Fortunately, the medallion data model provides a helpful roadmap for balancing centralization with agility. The model, shown in Figure 1, can be adapted to many use cases, but the basic idea is that raw data can be ingested easily with little control; silver data is processed and provides a “truth substrate” that multiple teams can work from; and gold data are processed maximally for specific use cases—for example, in the case of the MMM example used above, into an econometric time series data frame.
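The three layers can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not a reference implementation: the ad-spend rows, field names, and the `to_silver` and `to_gold_weekly` helpers are all hypothetical, and a real platform would do this in tables or views rather than in-memory lists.

```python
from collections import defaultdict
from datetime import date, timedelta

# Bronze: raw rows landed as-is. This is a hypothetical ad-spend export
# in which every value arrives as a string.
BRONZE = [
    {"day": "2025-01-06", "channel": "paid_search", "spend": "120.50"},
    {"day": "2025-01-07", "channel": "paid_search", "spend": "80.00"},
    {"day": "2025-01-07", "channel": "paid_search", "spend": "80.00"},  # duplicate load
    {"day": "2025-01-08", "channel": "email", "spend": "n/a"},  # unparseable value
    {"day": "2025-01-14", "channel": "email", "spend": "40.00"},
]


def to_silver(bronze):
    """Type, validate, and de-duplicate raw rows into a shared layer."""
    seen, silver = set(), []
    for row in bronze:
        try:
            record = (
                date.fromisoformat(row["day"]),
                row["channel"],
                float(row["spend"]),
            )
        except (KeyError, ValueError):
            continue  # simplification: a real pipeline would log the reject
        # Simplification: exact-match rows are treated as duplicate loads.
        if record not in seen:
            seen.add(record)
            silver.append(record)
    return silver


def to_gold_weekly(silver):
    """Aggregate silver rows into a weekly spend-by-channel series."""
    weekly = defaultdict(float)
    for day, channel, spend in silver:
        week_start = day - timedelta(days=day.weekday())  # Monday of that week
        weekly[(week_start, channel)] += spend
    return dict(sorted(weekly.items()))


silver = to_silver(BRONZE)
gold = to_gold_weekly(silver)
for (week_start, channel), spend in gold.items():
    print(week_start.isoformat(), channel, spend)
```

The split of responsibility is the design choice that matters: the silver step is where shared rules live, while the gold step is shaped entirely by one use case, here a weekly series of the sort an MMM would consume.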

In this model, IT or the Data Team still plays two critical roles: it provisions the storage, compute, and development environment for the business users, and it coordinates the metadata and taxonomy standardization required to do the categorical analysis that marketers do day in and day out (for example, naming channels consistently, or calling customer segments the same things). However, IT also recognizes that it needs to step back when it comes to marketers adding the bronze (raw) data they need for ad hoc purposes, and in the creation of the gold data frames that will power the expert use cases that make the business run.
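Consistent channel naming is the sort of standardization that is easy to show in code. The sketch below assumes a centrally maintained alias table; the canonical names, the aliases, and the `standardize_channel` helper are all hypothetical and stand in for whatever taxonomy a given team agrees on.

```python
# Hypothetical channel taxonomy: canonical names mapped to known aliases.
# Both the names and the aliases are illustrative, not a standard.
CHANNEL_ALIASES = {
    "paid_search": {"paid search", "sem", "ppc", "google ads"},
    "paid_social": {"paid social", "facebook ads", "meta ads"},
    "email": {"e-mail", "newsletter"},
}

# Invert to alias -> canonical so each lookup is a single dict access.
ALIAS_TO_CANONICAL = {
    alias: canonical
    for canonical, aliases in CHANNEL_ALIASES.items()
    for alias in aliases
}
# Canonical names map to themselves.
ALIAS_TO_CANONICAL.update({name: name for name in CHANNEL_ALIASES})


def standardize_channel(raw):
    """Return the canonical channel name, or None if the label is unknown."""
    key = " ".join(raw.strip().lower().split())  # trim, lowercase, collapse spaces
    return ALIAS_TO_CANONICAL.get(key)


def standardize_all(raw_names):
    """Split labels into a raw -> canonical mapping and a list of unknowns."""
    mapped, unmapped = {}, []
    for raw in raw_names:
        canonical = standardize_channel(raw)
        if canonical is None:
            unmapped.append(raw)  # surfaced for review rather than guessed
        else:
            mapped[raw] = canonical
    return mapped, unmapped


mapped, unmapped = standardize_all(["SEM", " Google  Ads", "E-mail", "Podcast"])
print(mapped)
print(unmapped)
```

Returning the unmapped labels instead of guessing keeps the central team in charge of the taxonomy while letting marketers see exactly which new names need a decision.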

An Analytical Marketing Team

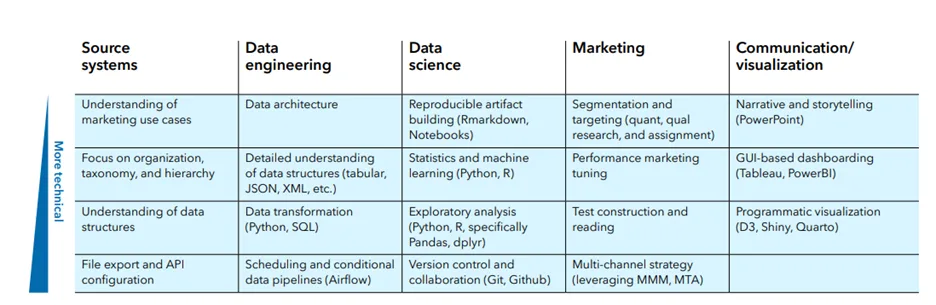

The analytical marketing team should be made up of Swiss Army knife types: employees who don’t just understand the technology of marketing but can work with it. Concretely, this means a grasp of source systems (martech); the data itself; data science tools; and, of course, the typical marketing superpowers of segmentation, testing, channel strategy, and creative storytelling.

This means changes to hiring and training. Many marketing majors lack training in analytical tools, so these employees will need major technical upskilling. Another approach is teaching more technical individuals marketing fundamentals. Either can work.

Guiding Principles

A marketing leader who wants to merge craft and skill can start with a few guiding principles:

- Environment

a. Data, compute, and development should all happen in one place…

b. …but this means that the team provisioning the environment needs to focus on responsiveness

c. Martech should be owned by marketing, and marketers should be the experts in these systems

- Data

a. It should be easy to add new data sources as Bronze assets…

b. …but taxonomy, metadata, and quality should be centralized for Silver assets…

c. …and experts should drive development of Gold assets that are fit-for-purpose

- People

Marketers should increasingly be analytical, and marketing data and analytics teams should be increasingly integrated into marketing generally

- The Work

All marketing data and analytics projects should be “use-case first”: what will this accomplish? (In Agile lingo, “user stories.”)

These all boil down to a single principle: combining craft with skill to create a team of generalists. This might sound counterintuitive; “generalist” can be seen as a demeaning term. However, flip this on its head to the optimistic version of the word: polymath, or Renaissance man (or person). This describes the ideal marketer to a tee: someone who is both a jack of all trades and a master of many.
